- Mar 20, 2019

-

-

Edward Yang authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18131 ghimport-source-id: 473dae70f6c236d317bec77d894310c0aa0376ec Stack from [ghstack](https://github.com/ezyang/ghstack): * **#18131 Copy-edit CONTRIBUTING and update.** Signed-off-by: Edward Z. Yang <ezyang@fb.com> Differential Revision: D14505049 fbshipit-source-id: 02aeae33c0049889243c56dd0d761487dac2351e

-

Ailing Zhang authored

Summary: fixes #18057 according to colesbury 's suggestion. Thanks! cc: ezyang Pull Request resolved: https://github.com/pytorch/pytorch/pull/18168 Differential Revision: D14520953 Pulled By: ailzhang fbshipit-source-id: 970e6cfb482d857a81721ec1d0ee4a4df84a0450

-

Elias Ellison authored

Summary: Further breakup test_misc.h. The remaining tests don't directly map to a jit file so I left them in test_misc. Pull Request resolved: https://github.com/pytorch/pytorch/pull/18191 Differential Revision: D14533442 Pulled By: eellison fbshipit-source-id: 7f538ce0aea208b6b55a4716dfcf039548305041

-

- Mar 19, 2019

-

-

David Riazati authored

Summary: Documents the serialization format for `torch.jit.save`. Some of the info is copied from houseroad's internal doc. [Formatted Markdown](https://github.com/driazati/pytorch/blob/serial_docs/torch/csrc/jit/README.md) Also refactors the readme to have a heading hierarchy + table of contents Pull Request resolved: https://github.com/pytorch/pytorch/pull/17951 Differential Revision: D14531644 Pulled By: driazati fbshipit-source-id: cbcd9462054cc9f8a2f8cea2c98d8aba4e7d227c

-

Edward Yang authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18193 ghimport-source-id: 540859cf0b238a9832f45b3f4c2351e3343fc1a2 Stack from [ghstack](https://github.com/ezyang/ghstack): * **#18193 Turn on Travis builds for ghstack PRs.** Signed-off-by: Edward Z. Yang <ezyang@fb.com> Differential Revision: D14529945 fbshipit-source-id: 4476e996e311a04f2a997ca9b7c4cf2157dd6286

-

Michael Suo authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18195 ghimport-source-id: 05102cb115c6bd6d141f51905e20155bcd79a908 Stack from [ghstack](https://github.com/ezyang/ghstack): * **#18195 [build] do not throw when unicode is seen in pull request info** Differential Revision: D14529707 fbshipit-source-id: 2f6a31b01b3a9b044fd24be466cc5325b70929ad

-

Edward Yang authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18192 ghimport-source-id: 9523a09d7ec202ef08cf0ecdf48c42739ea6b0ce Stack from [ghstack](https://github.com/ezyang/ghstack): * **#18192 Delete bugbear from Python 2 lint.** Signed-off-by: Edward Z. Yang <ezyang@fb.com> Differential Revision: D14529240 fbshipit-source-id: 1a433b53dd38d1c455e8c0750d97c594ac51ef09

-

David Riazati authored

Summary: The type of each `initial_ivalue` is completely known at some point, but that information is discarded by the time a call to it is emitted. This PR is kind of a hack; as a better (longer) solution, the method should know about the type of each initial value. Pull Request resolved: https://github.com/pytorch/pytorch/pull/18156 Differential Revision: D14525768 Pulled By: driazati fbshipit-source-id: 52d53e9711a07a4551c988bd95fe997e654aa465

-

Tongzhou Wang authored

Summary: fixes https://github.com/pytorch/pytorch/issues/18078 Pull Request resolved: https://github.com/pytorch/pytorch/pull/18079 Reviewed By: ezyang Differential Revision: D14521192 Pulled By: zou3519 fbshipit-source-id: cec773a3a6f2c405a0d9701e213b7caf81649181

-

Edward Yang authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18138 ghimport-source-id: be62a71ef98714e6f168a00f84120f612363528e Stack from [ghstack](https://github.com/ezyang/ghstack): * **#18138 Enable flake8-bugbear line length checking.** This turns on flake8-bugbear's line length checker (B950), which permits violations of up to 10% but specifies the "true" limit when you go over. I had to ignore a bunch of flake8-bugbear's other checks when I turned this on. They're good checks though (they're turned on in fbcode) and we should fix them eventually. Signed-off-by: Edward Z. Yang <ezyang@fb.com> Reviewed By: salexspb Differential Revision: D14508678 fbshipit-source-id: 2610ecc0dd43cc0788d77f4d024ebd85b26b8d41
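
The 10% tolerance described above can be illustrated with a minimal sketch. This is not flake8-bugbear's code: the function names and the default limit of 80 are assumptions made for the example, and the message text is only modeled on the B950 style of reporting the "true" limit.

```python
def b950_violations(lines, max_line_length=80):
    """Return (line_number, length) pairs for lines more than 10% over the limit.

    Hypothetical helper: the default of 80 is an assumption for this sketch.
    """
    threshold = max_line_length * 1.1
    return [
        (number, len(line))
        for number, line in enumerate(lines, start=1)
        if len(line) > threshold
    ]


def b950_message(length, max_line_length=80):
    # The report names the configured limit, not the 10%-padded threshold.
    return f"B950 line too long ({length} > {max_line_length} characters)"


source = ["x" * 80, "x" * 88, "x" * 89]
for number, length in b950_violations(source):
    print(number, b950_message(length))
```

Lines of 80 and 88 characters pass under a limit of 80, while an 89-character line is flagged and reported against the limit of 80.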

-

Michael Suo authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18146 ghimport-source-id: 4b061c27c5c44ef0d06066490ed16cab3d0c7a64 Stack from [ghstack](https://github.com/ezyang/ghstack): * **#18146 [jit] fix bug in alias analysis** We handled hasWriters() incorrectly in the case of wildcards. There's even a comment describing the correct behavior. Sad! Much thanks to t-vi for tracking this down and suggesting the fix! Differential Revision: D14524208 fbshipit-source-id: 8010b54257241bd64013a0d0a8b6e7d22d8c70af

-

vishwakftw authored

Summary: Changelog: - Incorporate a simple backend check in the linearSolveCheckInputs function in LinearAlgebraUtils.h Pull Request resolved: https://github.com/pytorch/pytorch/pull/18116 Differential Revision: D14504469 Pulled By: soumith fbshipit-source-id: 7402b6dbaa8d73048946613b806d54f68bcbd8f4

-

Hector Yuen authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18123 The motivation for this fix is to resolve things like `for(auto i = 0; i < N; i++)` where N is bigger than int32. These instances of comparison were found by enabling -Wsign-compare. There are way too many things to fix, so this is being issued as a series of fixes. The plan is to fix all these issues and then enable this flag in Caffe2 to catch future instances. Reviewed By: ZolotukhinM Differential Revision: D14497094 fbshipit-source-id: bca3927a2188bd33a508fa503ba221c220cdaefe

-

Neta Zmora authored

Summary: The momentum buffer is initialized to the value of d_p, but the current code takes the long way to do this:

1. Create a buffer of zeros
2. Multiply the buffer by the momentum coefficient
3. Add d_p to the buffer

All of these can be collapsed into a single step:

1. Create a clone of d_p

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18114 Differential Revision: D14509122 Pulled By: ezyang fbshipit-source-id: 4a79b896201d5ff20770b7ae790c244ba744edb8
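
The equivalence claimed in this summary can be checked with a small sketch. Plain Python lists stand in for tensors here, and both function names are hypothetical; this is not the optimizer's actual code.

```python
def init_momentum_buffer_long(d_p, momentum):
    """The three-step initialization described in the summary."""
    buf = [0.0] * len(d_p)                     # 1. buffer of zeros
    buf = [momentum * b for b in buf]          # 2. scale by the momentum coefficient
    buf = [b + g for b, g in zip(buf, d_p)]    # 3. add d_p
    return buf


def init_momentum_buffer_short(d_p):
    """The single-step replacement: a clone of d_p."""
    return list(d_p)


grad = [0.5, -1.25, 3.0]
long_buf = init_momentum_buffer_long(grad, momentum=0.9)
short_buf = init_momentum_buffer_short(grad)
print(long_buf == short_buf)
```

Because the buffer starts at zero, scaling it changes nothing and the addition just copies d_p, so a clone produces the same buffer with fewer operations.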

-

Ailing Zhang authored

Summary: In aten we have a _fused_dropout implementation for CUDA case. As ngimel suggested if we discard it in JIT AD, it hurts performance. It doesn't seem ideal to include backend specific implementation in AD, but this is helpful to prevent performance regression atm. Pull Request resolved: https://github.com/pytorch/pytorch/pull/17756 Differential Revision: D14368999 Pulled By: ailzhang fbshipit-source-id: 9a371c5020f630e8f6e496849ec9772b6f196169

-

Neeraj Pradhan authored

Summary: Addresses #15738, using fritzo's suggestion. This adds a `torch._sample_dirichlet` method in `Distributions.cpp` and `Distributions.cu`.

- For CPU, this leads to no perf hit since all we do is promote the `alpha` to double when getting the gamma samples (the gamma sampler uses `accscalar_t` (double for CPU) anyway) and cast it back to float32 on return.
- I have added an analogous method for CUDA as well, but the default sampler for CUDA uses scalar_t for efficiency, so I have kept it as that. With this, I do not see the bias towards 1 as reported in #15738 with `float32`, but there is a spurious mode at 0.5, as would be expected. Users would need to explicitly use `float64` for GPU to not see the spurious mode at 0.5. (EDIT: see note below, it appears that the bias issue is still there for certain builds).

Added some tests and checked that there is no perf regression. My experience with C++ is very limited, so apologies in advance if I missed something basic. cc. ailzhang, fritzo, fmassa Pull Request resolved: https://github.com/pytorch/pytorch/pull/17488 Differential Revision: D14410301 Pulled By: ezyang fbshipit-source-id: 62b2f694b4642685eab06db96d74ce28e05c3992
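
The CPU approach described above (draw gamma samples in double precision, normalize, then cast back to float32) can be sketched in plain Python. This is an illustration under assumptions, not the code in `Distributions.cpp`: the function names are hypothetical, Python floats play the role of double, and `struct` is used only to emulate the float32 round trip.

```python
import random
import struct


def to_float32(x):
    """Round a Python float (a double) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def sample_dirichlet(alpha, rng=random):
    """Sample from Dirichlet(alpha) by normalizing independent gamma draws.

    The gamma draws and the normalization happen in double precision;
    only the final result is cast back to float32.
    """
    gammas = [rng.gammavariate(float(a), 1.0) for a in alpha]
    total = sum(gammas)
    return [to_float32(g / total) for g in gammas]


rng = random.Random(0)
sample = sample_dirichlet([1.0, 2.0, 3.0], rng)
print(sample, sum(sample))
```

Doing the division in double precision before the cast is what limits rounding error in the normalized result; the sketch does not attempt to reproduce the float32 bias reported in #15738.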

-

Gregory Chanan authored

Summary: It's wrong and unused. Use one of the many other constructors instead :). Pull Request resolved: https://github.com/pytorch/pytorch/pull/18137 Differential Revision: D14508364 Pulled By: gchanan fbshipit-source-id: 19c6ff78ad9d9221d0874425edd02b78627c4ca7

-

Gregory Chanan authored

Summary: There are multiple backends for a device type, so we just kill this function. Also, kill a getNonVariableType instance which was also underspecified. Pull Request resolved: https://github.com/pytorch/pytorch/pull/18135 Differential Revision: D14507474 Pulled By: gchanan fbshipit-source-id: fc791a76d4b851b23d09a070725f3838621eb13d

-

Gregory Chanan authored

Summary: This gets rid of 'aten_sparse' which was used at one time with legacy THS code, but is now only overloaded in native_parse.py. The way that 'aten_sparse' worked was wonky -- it extended all backends (default [CPU, CUDA]) to include sparse. But this is totally unnecessary; we already have the backends we need to generate for from type_method_definition_dispatch. codegen changes: https://github.com/gchanan/pytorch/blob/fc37c8e171b7ebd1b1755469cf6a146a2abedc13/diff.txt Pull Request resolved: https://github.com/pytorch/pytorch/pull/18144 Reviewed By: ezyang Differential Revision: D14511324 Pulled By: gchanan fbshipit-source-id: 8bb4ac4cf0985f8756790779a22bc229e18e8e7f

-

Bharat Raghunathan authored

Summary: Fix #16428 by correcting type of 'swap' from `float` to `bool` Pull Request resolved: https://github.com/pytorch/pytorch/pull/18115 Differential Revision: D14516615 Pulled By: ezyang fbshipit-source-id: c61a45d533f3a443edf3c31c1ef3d9742bf46d2b

-

Deepali Chourasia authored

Summary: Observed that when there is no GPU support available, `workspace` sets `GetGpuPeerAccessPattern` to `[]` in https://github.com/pytorch/pytorch/blob/master/caffe2/python/workspace.py#L79 and this case is not handled in https://github.com/pytorch/pytorch/blob/master/caffe2/python/data_parallel_model.py#L1065. Pull Request resolved: https://github.com/pytorch/pytorch/pull/17974 Differential Revision: D14517066 Pulled By: ezyang fbshipit-source-id: 186911d95c07e9a55ab82a41d0c7c919e4281bb4

-

Lutz Roeder authored

Summary: Maratyszcza harouwu yinghai This is broken since #13065. `c_str()` returns a pointer that isn't permanent. Pull Request resolved: https://github.com/pytorch/pytorch/pull/18109 Differential Revision: D14516622 Pulled By: ezyang fbshipit-source-id: 7113d92eac4f61479c4c7b323cf78cc8aa00b17e

-

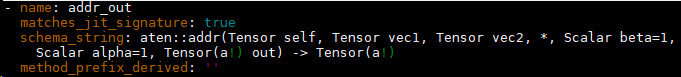

Junji Hashimoto authored

Summary: There are some line breaks in schema_string of Declarations.yaml. Is this valid yaml? I am reading yaml-spec. It seems that the “|” indicator or single/double quote is required to insert line-break. https://yaml.org/spec/1.2/spec.html  Could you increase line-width of yaml to avoid newline? Pull Request resolved: https://github.com/pytorch/pytorch/pull/18050 Differential Revision: D14516694 Pulled By: ezyang fbshipit-source-id: 1db9f3bf131b54a783d668de973915892603189e

-

svcscm authored

Reviewed By: yns88 fbshipit-source-id: eeeec4229e05916f2c17e525aee5ac4465ef52db

-

Zhang Dong authored

Summary: delete unnecessary file .gitkeep in /pytorch/tree/master/torch/csrc/nn Pull Request resolved: https://github.com/pytorch/pytorch/pull/18136 Differential Revision: D14516584 Pulled By: ezyang fbshipit-source-id: a7555693cb3df1c5e37fcd3ca9bb379a2258f2d1

-

David Riazati authored

Summary: Allows serialization/loading of attributes (`IValue`s of any type).

* metadata (attribute name, type) is stored in the `model.json`
* The binary format is a subset of the `pickle` module that supports the operations necessary for `IValue`s
* Attributes are serialized in the order they are defined on a module to a list in a single `attributes` file, with submodule attributes coming first. This order directly matches the order attributes are listed in `model.json`
* This can be inspected in Python with `pickle.load()` or with `pickletools` (PyTorch need not be installed for this to work)
* A class is used to store a tensor's index into the tensor table of the model, so to unpickle the file you have to use a custom Unpickler:

```python
import pickle

class TensorID(object):
    # Stores a tensor's index into the model's tensor table
    def __setstate__(self, id):
        self.id = id

class JitUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        if module == '__main__' and name == 'TensorID':
            return TensorID
        # Fall back to the default lookup instead of returning None
        return super().find_class(module, name)

with open("my_model/attributes.pkl", "rb") as f:
    JitUnpickler(f).load()
```

* pickle format: https://svn.python.org/projects/python/trunk/Lib/pickletools.py
* It currently does not support/guarantee that anything saved out with `pickle` (i.e. if you edit `attributes` with `pickle` directly) instead of our tools will be imported correctly

Also will fix #17683 and fix #16367

Followup Work:

* document format / choice of pickle: #17951
* create an example
* list specializations
* int size specializations, large binputs
* do a first pass over attributes to output only necessary `BINPUT` ops
* attribute reassignment (e.g. `self.my_attribute = new_value`)
* `tensor.save("some_checkpoint.pkl")` support with tensors embedded in Pickle file

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17423 Differential Revision: D14470965 Pulled By: driazati fbshipit-source-id: 6a21a9939efdbe59b4bc57fd31d6d630bab5297e
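
To make the `pickletools` inspection and the `BINPUT` followup item more concrete, here is a minimal sketch that uses only the Python standard library. The attribute list is made up, and an ordinary pickle stands in for the real `attributes` file, so this says nothing about what PyTorch's own pickler emits.

```python
import pickle
import pickletools


def count_ops(data, name):
    """Count occurrences of one pickle opcode (for example "BINPUT") in a byte string."""
    return sum(1 for op, _arg, _pos in pickletools.genops(data) if op.name == name)


# Hypothetical attribute values; protocol 2 memoizes lists and strings with BINPUT.
attributes = [1, 2.5, "hello", [3, 4]]
raw = pickle.dumps(attributes, protocol=2)

# pickletools.optimize drops PUT opcodes that no later GET refers to.
slim = pickletools.optimize(raw)

print(count_ops(raw, "BINPUT"), count_ops(slim, "BINPUT"))
pickletools.dis(slim)  # human-readable listing of the remaining opcodes
```

The optimized bytes still unpickle to the same list, which is the property a "first pass over attributes" would need to preserve.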

-

- Mar 18, 2019

-

-

Jongsoo Park authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18141 VLEN should've been 32 Reviewed By: jianyuh Differential Revision: D14510780 fbshipit-source-id: ddf12746e1c69677a268432432ddb088cc210084

-

svcscm authored

Reviewed By: yns88 fbshipit-source-id: ed297c07c681f5f45d3f99edf48680015ca5b138

-

Vishwak Srinivasan authored

Summary: Changelog:

- Renames `gesv` to `solve` to remain consistent with `cholesky_solve`.
- Rename all tests, fix callsites
- Create a tentative alias for `solve` under the name `gesv`, and add a deprecation warning to discourage its use.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18060 Differential Revision: D14503117 Pulled By: zou3519 fbshipit-source-id: 99c16d94e5970a19d7584b5915f051c030d49ff5
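
The alias-plus-warning pattern from this changelog can be sketched generically. The toy `solve` below works on scalars and is purely hypothetical; it only borrows the names `solve` and `gesv` and is not the PyTorch implementation or its signature.

```python
import warnings


def solve(b, a):
    """Toy scalar stand-in: return x such that a * x == b."""
    return b / a


def gesv(b, a):
    """Deprecated alias kept for backward compatibility; forwards to solve."""
    warnings.warn(
        "gesv is deprecated in favor of solve",
        DeprecationWarning,
        stacklevel=2,  # attribute the warning to the caller, not this wrapper
    )
    return solve(b, a)


with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    result = gesv(6.0, 3.0)

print(result, [str(w.message) for w in caught])
```

Keeping the old name as a thin wrapper lets existing callers keep working while the warning points them at the new name.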

-

James Reed authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18107 Pull Request resolved: https://github.com/pytorch/translate/pull/396 also: 1. fix issues with OptionalType not having a createWithContainedType (PyTorch diff) 2. Delete tests for ONNX full beam search export (nobody is using it and it just makes things harder. Currently ONNX doesn't support `_unwrap_optional`) Reviewed By: jmp84 Differential Revision: D14483771 fbshipit-source-id: 0e37ef1cb5a16d03a535eef808b0488b98802128

-

Narine Kokhlikyan authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17240 Added circular padding in addition to zero padding to Conv1D, Conv2D and Conv3D based on the solution suggested in: https://github.com/pytorch/pytorch/issues/3858 Reviewed By: ezyang Differential Revision: D14126416 fbshipit-source-id: a2f1587503ee0cfff98d5cb0d5b0a600ef8aaeb4
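
For readers unfamiliar with circular padding, here is a minimal one-dimensional sketch in plain Python. Both helper names are hypothetical and the lists stand in for tensors; it shows the wrap-around idea only, not the code added to the Conv modules.

```python
def circular_pad_1d(signal, pad):
    """Pad both ends of a list by wrapping values around from the opposite end."""
    if pad < 0 or pad > len(signal):
        raise ValueError("pad must be between 0 and len(signal)")
    if pad == 0:
        return list(signal)
    return signal[-pad:] + signal + signal[:pad]


def conv1d_valid(signal, kernel):
    """Plain sliding-window correlation with no implicit padding."""
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]


x = [1, 2, 3, 4]
padded = circular_pad_1d(x, 1)
print(padded)
print(conv1d_valid(padded, [1, 1, 1]))
```

With zero padding the border outputs would see zeros; with circular padding they see the values from the other end of the signal, which suits periodic data.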

-

Thomas Viehmann authored

Summary: ...because gcc will have failures with very strange error messages if you do. This affects people with Debian/Ubuntu-provided NVCC, the PR should not change anything for anyone else. Pull Request resolved: https://github.com/pytorch/pytorch/pull/18127 Differential Revision: D14504386 Pulled By: soumith fbshipit-source-id: 1aea168723cdc71cdcfffb3193ee116108ae755e

-

Michael Suo authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18121 ghimport-source-id: 70c273bfbcb68f7b25cf87f5614c662960864758 Stack from [ghstack](https://github.com/ezyang/ghstack): * **#18121 [jit] fix double free in test_jit** These definitions used to be in anonymous namespace so they weren't exported from the translation unit. #18071 put those in a `test` namespace so I guess they were getting their destructors called twice on exit somehow. Making them static again fixes the problem. Reviewed By: ezyang Differential Revision: D14498349 fbshipit-source-id: f969781695dcbebdfcfce667fce5b986222a373e

-

Huitong Qiu authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18059 Replace resize_dim() with set_sizes_and_strides() in `THTensor_(squeeze)` in aten/src/TH/generic/THTensor.cpp and `THCTensor_(squeeze)` in aten/src/THC/generic/THCTensor.cpp Reviewed By: ezyang Differential Revision: D14471066 fbshipit-source-id: 1c8c412ff09246c4df6843736e3bf0279bfadea8

-

Tongzhou Wang authored

Summary: Sphinx doesn't understand hyphens; it does not merge the two halves together in HTML. Pull Request resolved: https://github.com/pytorch/pytorch/pull/18118 Differential Revision: D14498012 Pulled By: mrshenli fbshipit-source-id: d6f4cfddc0a8e3a8f91578da43c26ca9c6fff3ce

-

- Mar 17, 2019

-

-

Gregory Chanan authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18073 ghimport-source-id: f4dadebafa0423c4c5a0e46c15b38129402d830a Stack: * #18072 properly device_guard IndexTensor and BoolTensor. * **#18073 Change one_hot from IndexTensor to Tensor.** There is no codegen change. Reviewed By: ezyang Differential Revision: D14485248 fbshipit-source-id: ee2ba8e5dcbbbaf0214a026c8e7ed4e6712becb0

-

Gregory Chanan authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18072 ghimport-source-id: 9653731602c72f299e095dd50e3afe6bcc8b01d6 Stack: * **#18072 properly device_guard IndexTensor and BoolTensor.** * #18073 Change one_hot from IndexTensor to Tensor. Currently IndexTensor and BoolTensors do not have device_guards applied to them. This is bad in the case where the only tensor(s) are IndexTensors or BoolTensors, because no device guard is present. The only case this currently happens is with one_hot which ends up not mattering because of the way the implementation is written. But I wanted to make sure we are covered here. Reviewed By: ezyang Differential Revision: D14485249 fbshipit-source-id: e57b28086fa1ad2fdd248bb1220e8a2e42da03e1

-

Michael Suo authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18093 ghimport-source-id: 021adc52aa7bfe5fff74531c76a8cd28cab30b2a Stack: * **#18093 [jit] fix corner case for optional aliasing** Occasionally the compiler can insert constant Nones to make types line up. In that case, don't try to make a pointer from the optional type to None, since we know statically that None won't be mutated or whatever. Reviewed By: shannonzhu Differential Revision: D14493004 fbshipit-source-id: 6564065f39d99ee5af664f3a0fe235892973d9be

-

- Mar 16, 2019

-

-

Jianyu Huang authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18089 Typo fix for the fully connected layer documentation. Reviewed By: jspark1105 Differential Revision: D14488632 fbshipit-source-id: ca0271ca0250c1d653ed7f250e8588f7b2ce1056

-

Duc Ngo authored

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18040 Add a flag to fail if floating-point exceptions are detected in operator runs. Sample exception: Exception [enforce fail at operator.h:837] !std::fetestexcept(FE_DIVBYZERO). Division by zero floating point exception (FE_DIVBYZERO) reported. Error from operator: input: "1" input: "0" output: "out" name: "" type: "Div" Reviewed By: jspark1105 Differential Revision: D14467731 fbshipit-source-id: fad030b1d619a5a661ff2114edb947e4562cecdd

-